In the past decade, breakthroughs in multiple sub-areas of Artificial Intelligence (AI) have made new applications possible in various domains. One typical yet essential example is public health. Humans face many challenges in the never-ending battle against disease. Among them, problems that involve network-structured data and planning for the future, such as disease control and resource allocation, are in particular need of effective solutions. Unfortunately, some of these problems are too complicated, or too large in scale, for humans to solve optimally. This thesis tackles these challenging sequential network planning problems in the public health domain and advances the state of the art in solving them.

In particular, my thesis makes three main contributions toward overcoming the challenges that emerge when applying sequential network planning in the public health domain: (1) a novel sequential network-based screening/contact tracing framework under uncertainty; (2) a novel sequential network-based mobile interventions framework; and (3) theoretical analysis, algorithmic solutions, and empirical experiments that show superior performance compared to previous approaches, both theoretically and empirically.

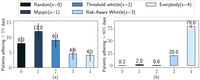

More concretely, the first part of this thesis studies the active screening problem as an emerging application for disease prevention. I introduce a new approach to modeling multi-round network-based screening/contact tracing under uncertainty. Based on the well-known network SIS model in computational epidemiology, which is applicable to many diseases, I propose a model of the multi-agent active screening problem (ACTS) and prove its NP-hardness. I further propose the REMEDY (REcurrent screening Multi-round Efficient DYnamic agent) algorithm for solving this problem. REMEDY has two variants, Full- and Fast-REMEDY, which trade off running time against solution quality. It is a Frank-Wolfe-style gradient descent algorithm made practical by a compact representation of the belief states that capture uncertainty. As the experiments show, Full- and Fast-REMEDY are not only superior to all previous approaches in controlling diseases; they are also robust to varying levels of missing information in the social graph and to changes in budget, thus enabling the use of the agent to improve current practice in real-world screening contexts.
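As a rough illustration of the dynamics that such a screening problem is built on, the following Python sketch simulates one round of a discrete-time network SIS process with a screening action. The function name `sis_round`, the parameters `alpha` (per-contact infection probability) and `c` (spontaneous recovery probability), and the order of events within a round are assumptions made for this example; they are not the exact ACTS formulation, and the sketch uses fully observed states rather than belief states.

```python
import random

def sis_round(adj, infected, screened, alpha, c, rng):
    """Simulate one round of a toy network SIS model with screening.

    adj      -- dict mapping each node to a list of its neighbors
    infected -- set of currently infected nodes
    screened -- set of nodes screened this round (assumed cured if infected)
    alpha    -- assumed probability that one infected neighbor transmits
    c        -- assumed probability that an infected node recovers on its own
    rng      -- random.Random instance used for all sampling
    """
    # Assumption: screening happens first and cures every screened infected node.
    still_infected = infected - screened
    next_infected = set()
    for node in adj:
        if node in still_infected:
            # An unscreened infected node stays infected unless it recovers.
            if rng.random() >= c:
                next_infected.add(node)
        else:
            # A susceptible node is exposed independently by each infected neighbor.
            k = sum(1 for nb in adj[node] if nb in still_infected)
            p_infect = 1.0 - (1.0 - alpha) ** k
            if rng.random() < p_infect:
                next_infected.add(node)
    return next_infected

# Hypothetical path graph 0 - 1 - 2 with node 0 initially infected.
path = {0: [1], 1: [0, 2], 2: [1]}
rng = random.Random(0)
no_screening = sis_round(path, {0}, set(), alpha=1.0, c=0.0, rng=rng)
with_screening = sis_round(path, {0}, {0}, alpha=1.0, c=0.0, rng=rng)
```

With the extreme parameter values used above, the round is deterministic: without screening the infection spreads from node 0 to node 1, while screening node 0 removes the infection before it can spread. A multi-round planner has to choose the screened set each round under a budget, and without observing `infected` directly.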

The second part of this thesis focuses on scaling the ACTS problem to long time horizons. Although Full-REMEDY provides excellent solution quality, it fails to scale to large time horizons while fully considering the future effects of current interventions. I therefore propose a novel reinforcement learning (RL) approach based on Deep Q-Networks (DQN). The nature of the ACTS problem raises several challenges that traditional RL cannot handle, including (1) the combinatorial nature of the problem, (2) the need for sequential planning, and (3) the uncertainty in the infection states of the population. I design several innovative adaptations in my RL approach to address these challenges, and in this part I explain why and how these adaptations are made.

In the third part, I introduce a novel sequential network-based mobile interventions framework. It is a restless multi-armed bandit (RMAB) model with network pulling effects. In the proposed model, arms are partially recharging and connected through a graph. Pulling one arm also improves the state of neighboring arms, which significantly extends the previously studied setting of fully recharging bandits with no network effects. In mobile intervention applications, such network effects may arise from regular population movements (such as commuting between home and work). In my thesis, I show that network effects in RMABs induce strong reward coupling that is not accounted for by existing solution methods. I also propose a new solution approach for networked RMABs that exploits concavity properties arising under natural assumptions on the structure of intervention effects. In addition, I show the optimality of this method in idealized settings and demonstrate that it empirically outperforms state-of-the-art baselines.
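To make the network pulling effect concrete, the following Python sketch gives one toy reading of such dynamics. Everything in it is an assumption made for illustration: the integer state scale (higher is better), the unit decay of untouched arms, and the names `step_networked_arms`, `pull_gain`, and `neighbor_gain`. It is not the transition model or the solution method of the thesis.

```python
def step_networked_arms(states, adj, pulled, max_state, pull_gain, neighbor_gain):
    """Advance a toy networked bandit by one round.

    states        -- dict mapping each arm to an integer state in [0, max_state]
    adj           -- dict mapping each arm to a list of neighboring arms
    pulled        -- set of arms pulled this round
    pull_gain     -- assumed improvement of a pulled arm (partial, not a full reset)
    neighbor_gain -- assumed smaller improvement of each neighbor of a pulled arm
    """
    # Arms that benefit only through the network effect.
    helped = set()
    for arm in pulled:
        helped.update(adj[arm])
    helped -= pulled

    next_states = {}
    for arm, s in states.items():
        if arm in pulled:
            next_states[arm] = min(max_state, s + pull_gain)
        elif arm in helped:
            next_states[arm] = min(max_state, s + neighbor_gain)
        else:
            # Restless: untouched arms keep evolving (here, they decay by one).
            next_states[arm] = max(0, s - 1)
    return next_states

# Hypothetical star graph: arm 0 is connected to arms 1 and 2.
star = {0: [1, 2], 1: [0], 2: [0]}
start = {0: 2, 1: 2, 2: 2}
pull_hub = step_networked_arms(start, star, {0}, max_state=5, pull_gain=2, neighbor_gain=1)
pull_leaf = step_networked_arms(start, star, {1}, max_state=5, pull_gain=2, neighbor_gain=1)
```

In this toy example, pulling the hub improves all three arms, whereas pulling a leaf improves only that leaf and the hub and lets the other leaf decay. The value of pulling an arm therefore depends on its neighborhood and on which other arms are pulled, which is the kind of reward coupling that per-arm methods ignore.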